A profile in The Atlantic describes how the algorithm developed by Eric Schwartz and Jacob Abernethy helped the city of Flint’s lead service line replacement program, led by Brig. Gen. (ret.) Michael McDaniel, find and efficiently replace the hazardous pipes. The article then details how the city’s replacement costs increased when a private engineering firm took over the pipe replacement work.

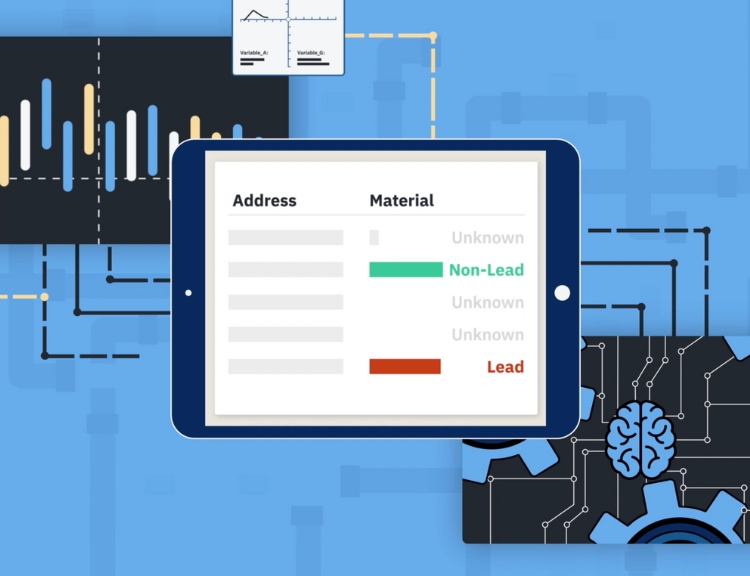

When Abernethy and his collaborator, the University of Michigan’s Eric Schwartz, got involved over the summer of 2016, they saw a familiar type of prediction problem: sequential decision making under uncertain conditions. The crews didn’t have perfect information, but they still needed the best possible answer to the question Where do we dig next? The results of each new dig could be fed back into the model, improving its accuracy.

Initially, they had little data. In March 2016, only 36 homes had had their pipes excavated. …

So the University of Michigan team asked Fast Start to check lines across the city using a cheaper system called “hydrovacing,” which uses jets of water, instead of a backhoe, to expose pipes. The data from those cheaper excavations went back into the model, allowing the researchers to predict different zones of the city more accurately.

As they refined their work, they found that the three most significant determinants of the likelihood of having lead pipes were the age, value, and location of a home. More important, their model became highly accurate at predicting where lead was most likely to be found, and through 2017, the contractors’ hit rate in finding lead pipes increased. “We ended up considerably above an 80 percent [accuracy] for the last few months of 2017,” McDaniel told me.
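The dig-then-update loop described in the excerpt can be illustrated with a toy example. The sketch below is not the researchers' model, which the article says drew on home age, value, and location. It substitutes a much simpler, well-known technique, Thompson sampling over a few zones, and every zone name and lead rate in it is made up for illustration.

```python
import random

def choose_zone(counts, rng):
    """Draw a plausible lead rate for each zone and pick the highest draw."""
    draws = {
        zone: rng.betavariate(1 + hits, 1 + misses)  # Beta(1, 1) prior per zone
        for zone, (hits, misses) in counts.items()
    }
    return max(draws, key=draws.get)

def simulate(true_rates, n_digs, seed=0):
    """Run n_digs excavations, feeding each result back into the zone counts."""
    rng = random.Random(seed)
    counts = {zone: (0, 0) for zone in true_rates}  # (lead found, no lead)
    total_hits = 0
    for _ in range(n_digs):
        zone = choose_zone(counts, rng)
        found_lead = rng.random() < true_rates[zone]  # simulated dig result
        hits, misses = counts[zone]
        counts[zone] = (hits + 1, misses) if found_lead else (hits, misses + 1)
        total_hits += found_lead
    return counts, total_hits / n_digs

if __name__ == "__main__":
    # Hypothetical zones and lead rates, not Flint data.
    counts, hit_rate = simulate({"north": 0.9, "south": 0.1}, n_digs=500)
    print(counts, round(hit_rate, 2))
```

Early digs are spread across zones because little is known. As results accumulate, the sampled rates concentrate and most digs go where lead has actually been found, which is the same feedback effect the excerpt attributes to the cheaper hydrovac inspections.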